Chapter 4 was about file geodatabases. I had no idea what these even were before (perhaps I wasn’t paying enough attention in GEOG 292), but now I get that they’re basically Esri’s way of organizing spatial data. I learned that you store all your feature classes, raster datasets, and related tables in a geodatabase, so everything for a project lives in one place instead of being scattered across loose files. The chapter showed how to import data into these geodatabases, modify tables (like adding and deleting columns, which I’m always a little nervous about, since I don’t want to delete something vital!), and even how to write little expressions to calculate fields. That was a cool connection back to some basic coding stuff. Joins were also covered; a join links two tables together based on a common field. It’s like saying, “These two tables have a column in common, so stick them together!”
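To make the join and calculate-field ideas concrete, here is a rough sketch in plain Python. It is not arcpy and not what ArcGIS Pro runs internally; the `join_tables` helper, the field names, and the sample rows are all made up for illustration.

```python
def join_tables(left_rows, right_rows, key):
    """Left join: copy the right table's fields onto each matching left row."""
    lookup = {row[key]: row for row in right_rows}
    # Every non-key field name that appears anywhere in the right table.
    extra_fields = sorted({f for row in right_rows for f in row if f != key})
    joined = []
    for row in left_rows:
        match = lookup.get(row[key])
        merged = dict(row)
        for field in extra_fields:
            # Unmatched rows get None, similar in spirit to a null value.
            merged[field] = match.get(field) if match else None
        joined.append(merged)
    return joined


# Hypothetical sample tables that share a "tract_id" column.
tracts = [
    {"tract_id": "001", "area_sqmi": 2.0},
    {"tract_id": "002", "area_sqmi": 4.0},
]
population = [{"tract_id": "001", "pop": 3000}]

joined = join_tables(tracts, population, "tract_id")

# A "calculate field" style expression: density = pop / area, skipping nulls.
for row in joined:
    row["density"] = None if row["pop"] is None else row["pop"] / row["area_sqmi"]
```

The second tract has no matching population row, so its joined fields stay empty, which is the kind of thing worth checking for after any join.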

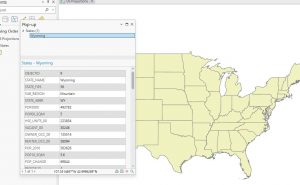

Chapter 5 was a lot. It was about different types of spatial data and where to find them. It started with map projections, which are ways to show a round earth on a flat map. There are a bunch of different projections, and each one distorts something (shape, area, distance, or direction), so you have to choose the one whose trade-offs fit what you’re mapping. Then it went into projected coordinate systems, which are like grids laid over the flattened map, in units like feet or meters, to make measuring distances easier. The chapter also talked about vector data formats (like shapefiles, which I’ve seen before) and, most importantly, where to get all this data. It turns out there are tons of sources, like the US Census Bureau. I never realized how much data the government collects and makes available. There was also a section on exploring sources of spatial data like ArcGIS Living Atlas.
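As a small illustration of what a projection does and what its trade-offs look like, here is a sketch of the spherical Web Mercator formulas in plain Python. Web Mercator is my choice of example, not one the chapter singled out, and the function names are hypothetical.

```python
import math

EARTH_RADIUS_M = 6378137.0  # sphere radius used by Web Mercator, in meters


def web_mercator(lon_deg, lat_deg):
    """Project longitude/latitude in degrees to x/y in meters."""
    lon = math.radians(lon_deg)
    lat = math.radians(lat_deg)
    x = EARTH_RADIUS_M * lon
    y = EARTH_RADIUS_M * math.log(math.tan(math.pi / 4 + lat / 2))
    return x, y


def scale_factor(lat_deg):
    """How much this projection stretches distances at a given latitude."""
    return 1.0 / math.cos(math.radians(lat_deg))


x, y = web_mercator(-75.0, 40.0)   # a point somewhere in the eastern US
stretch_at_60 = scale_factor(60.0)  # distances appear about twice as long
```

The stretch grows with latitude, which is why this kind of projection is handy for web maps but a poor choice for comparing areas.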

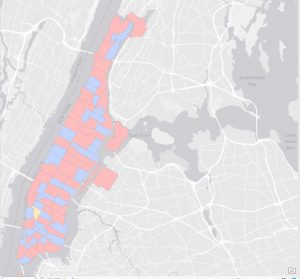

Chapter 6 was about doing stuff with data, which is where it gets fun (and sometimes frustrating!). It’s called “geoprocessing,” which is a fancy word for manipulating spatial data. The chapter covered a bunch of tools that let you extract parts of your data, combine layers, and do all sorts of useful things. One tool that stood out was “Dissolve,” which lets you merge polygons together based on a common attribute. For example, if you have a map of city blocks and you want to group them into neighborhoods, Dissolve can do that. Another tool was “Intersect,” which finds where features overlap. So, if you have streets and fire company zones, you can find which streets are covered by each company. I had a little trouble with some of the parameters, especially making sure I had the input and output layers right. Sometimes I felt like I was just clicking buttons and hoping for the best! But I eventually got it to work.
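The attribute side of Dissolve can be sketched in plain Python as a group-and-summarize step. This only mimics the grouping and statistics; it does not merge any polygon geometry the way the real tool does, and the `dissolve_attributes` helper and sample blocks are invented for the example.

```python
from collections import defaultdict


def dissolve_attributes(rows, group_field, sum_field):
    """Group rows by one field and total another, like Dissolve's statistics."""
    totals = defaultdict(lambda: {"block_count": 0, sum_field: 0})
    for row in rows:
        group = totals[row[group_field]]
        group["block_count"] += 1
        group[sum_field] += row[sum_field]
    return dict(totals)


# Hypothetical city blocks, each tagged with the neighborhood it belongs to.
blocks = [
    {"block_id": 1, "neighborhood": "Northside", "households": 40},
    {"block_id": 2, "neighborhood": "Northside", "households": 55},
    {"block_id": 3, "neighborhood": "Riverside", "households": 30},
]

neighborhoods = dissolve_attributes(blocks, "neighborhood", "households")
```

Three block rows collapse into two neighborhood rows, one per unique value of the dissolve field.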

Chapter 7 was about creating your own spatial data, which is called “digitizing.” It’s basically tracing things on a map to create points, lines, or polygons. I was surprised at how much you could do with this. The chapter showed how to edit existing features, like moving buildings around or changing their shapes. You can even add and delete vertices (those little points that define the shape of a line or polygon). There was also a section on using something called “procedural rules” to create 3D models, which looked really cool but also a bit intimidating. One thing that I got stuck on was snapping. I kept forgetting to turn it on, so my lines weren’t connecting properly.
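The idea behind snapping can be sketched in a few lines of plain Python: if a clicked point lands within some tolerance of an existing vertex, use that vertex instead. This is a simplified assumption about how snapping behaves, not ArcGIS Pro's actual logic, and `snap_to_vertex` is a hypothetical helper.

```python
import math


def snap_to_vertex(point, vertices, tolerance):
    """Return the nearest vertex if it is within tolerance, else the point."""
    if not vertices:
        return point
    nearest = min(vertices, key=lambda v: math.dist(point, v))
    if math.dist(point, nearest) <= tolerance:
        return nearest
    return point


# Hypothetical end points of an existing line, in map units.
existing_vertices = [(10.0, 5.0), (20.0, 5.0)]

snapped = snap_to_vertex((10.2, 5.1), existing_vertices, tolerance=0.5)
unsnapped = snap_to_vertex((15.0, 5.0), existing_vertices, tolerance=0.5)
```

With snapping off, the first click would stay at (10.2, 5.1) and leave a tiny gap, which is exactly the kind of line that looks connected but isn't.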

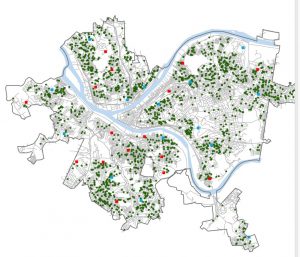

Chapter 8 was about geocoding, which is turning addresses into points on a map. It’s basically giving spatial context to location data. I learned that you need two things: a table with addresses (the “source table”) and a map with streets (the “reference data”). ArcGIS Pro then tries to match the addresses to the streets. The chapter went through the steps of building a “locator,” which is like a set of rules for geocoding. You have to tell it which fields in your table correspond to the address, city, state, and zip code. Then you run the geocoding tool, and it tries to find a match for each address. One thing that was emphasized was that geocoding is not perfect. It uses “fuzzy matching” because addresses can be messy (misspellings, abbreviations, etc.). So, you get a “match score” for each address, and you can set a threshold for what you consider a good match. I thought that was pretty smart, but it also means you have to double-check the results, especially if accuracy is super important.
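The match score and threshold idea can be sketched with Python's standard `difflib` module. This is only an illustration of fuzzy matching in general; ArcGIS locators use their own scoring, and the reference addresses, the `best_match` helper, and the threshold of 80 are assumptions for the example.

```python
from difflib import SequenceMatcher


def normalize(address):
    """Uppercase and collapse extra whitespace so formatting matters less."""
    return " ".join(address.upper().split())


def match_score(candidate, reference):
    """Similarity between two addresses on a 0 to 100 scale."""
    ratio = SequenceMatcher(None, normalize(candidate), normalize(reference)).ratio()
    return round(100 * ratio, 1)


def best_match(address, reference_addresses, min_score=80):
    """Return (reference, score) for the best match, or (None, score) if too low."""
    if not reference_addresses:
        return None, 0.0
    score, reference = max((match_score(address, ref), ref) for ref in reference_addresses)
    if score >= min_score:
        return reference, score
    return None, score


# Hypothetical reference data standing in for a street layer.
reference_addresses = ["123 MAIN ST", "45 OAK AVE", "78 ELM RD"]

exact = best_match("123 main st", reference_addresses)
typo = best_match("123 Mian St", reference_addresses)
unmatched = best_match("999 QQQQ ZZ", reference_addresses)
```

Raising `min_score` means fewer wrong matches but more unmatched addresses to fix by hand, so the threshold is a judgment call either way.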